AtomXR: Create XR experiences through natural language

Incubating a no-code XR development tool for designers empowered by AI

Date

2023

Duration

4 months

Project Type

XR / AI UX design and prototyping

Keywords

Unity, GPT-3.5, MRTK, generative AI

Role

Lead design, lead prototyping, business dev

Team

Alice Cai, Caine Ardayfio, AnhPhu Nguyen, Aria Xiying Bao, Lucas Couto

Overview

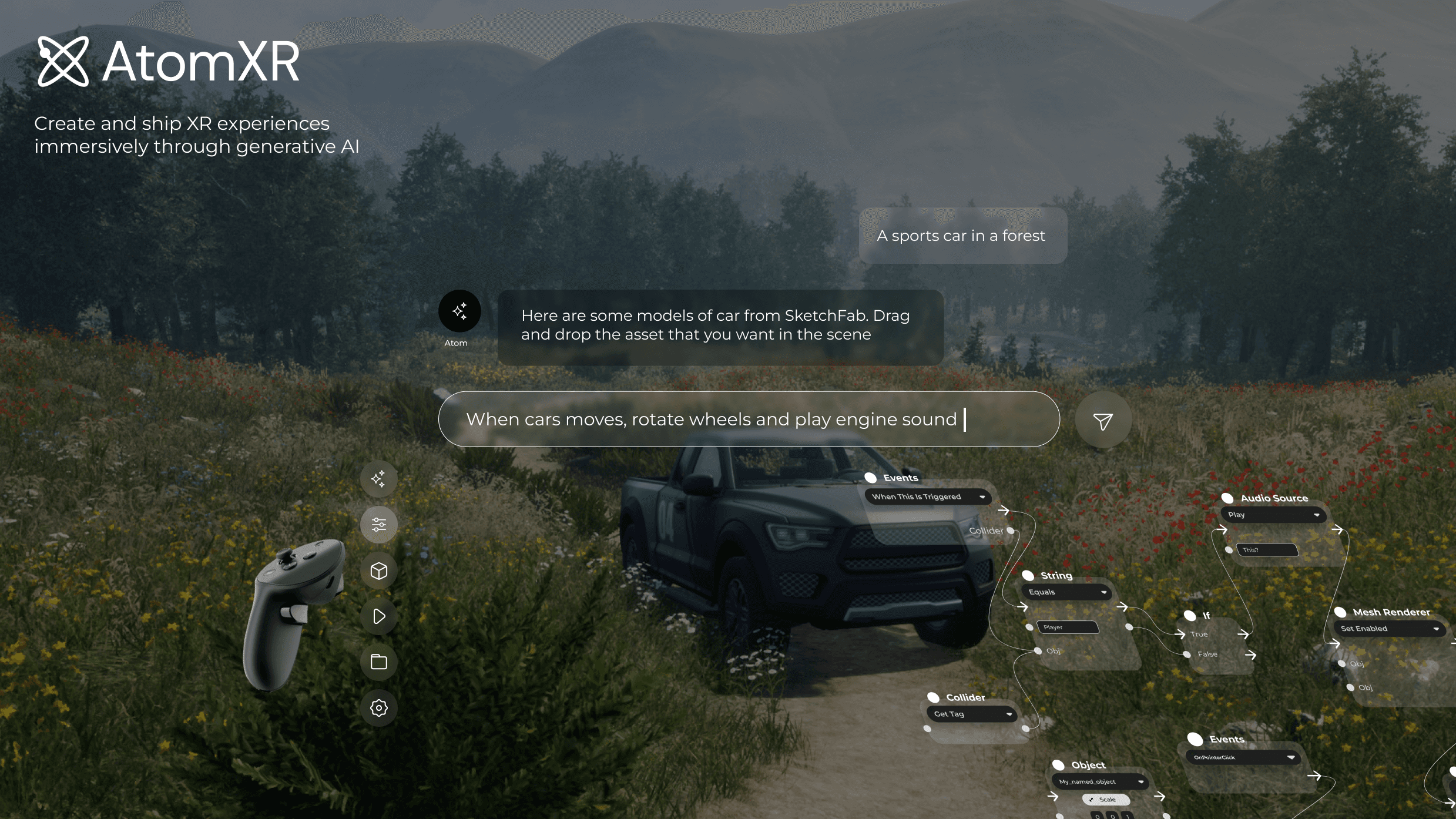

AtomXR is a no-code XR development tool driven by natural language. It uses large language models (LLMs) to generate application logic in real time, inside the headset. AtomXR started as a research project with a proof-of-concept demo and later grew into a startup incubation, where we defined an entirely new immersive interface that combines natural language with node-based editing.

Problem Space

The XR ecosystem has a supply problem. Prototyping and developing XR applications is difficult today, and as a result there is not enough XR content to draw consumers in. In traditional 2D workflows, designers can create high-fidelity prototypes without code, which makes the hand-off to engineers frictionless. In XR, showing a high-fidelity design usually requires a game engine like Unity or a professional 3D animation tool, both of which have steep learning curves. As a result, many XR designs never make it to a working prototype.

With the development of LLMs like GPT-3.5, the XR industry has the opportunity to bypass the learning curve of coding altogether via natural language and voice interaction.

Opportunity

💡

How might we use generative AI to bridge the gap between XR design and prototypes?

Solution

User Experience

Chat Interface

From visual scripting to immersive scripting: a brand-new take on node-based editing

Natural language voice interaction makes it easy to create logic and assets in real time. But what about editing? Voice can help, but users want a more precise way to make changes.

Node-based visual scripting is a powerful paradigm that lets people create and edit logic without first learning to code. Tools like Unity's Visual Scripting and Unreal Engine's Blueprints are widely used in the industry. Notably, Dreams, a game-creation application for PlayStation 4, pushed this paradigm to its limits, allowing creators to make full-fledged games without writing any code.

We took inspiration from this paradigm and designed a simplified node-based scripting system in passthrough mixed reality: immersive visual scripting.
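To make the node-based idea concrete, here is a minimal sketch of how a simplified event-to-action node graph could be represented and evaluated. It is not AtomXR's actual implementation; the class names (`EventNode`, `ActionNode`, `NodeGraph`), the event name `on_poke`, and the dictionary-based scene are all hypothetical, chosen only to illustrate how wiring a trigger node to action nodes produces behavior without code.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical scene state: object name -> property dictionary.
Scene = Dict[str, Dict[str, object]]


@dataclass
class ActionNode:
    """Hypothetical action node: sets one property on a target object."""
    target: str
    prop: str
    value: object

    def run(self, scene: Scene) -> None:
        # Create the object entry if it does not exist yet, then set the property.
        scene.setdefault(self.target, {})[self.prop] = self.value


@dataclass
class EventNode:
    """Hypothetical event node (e.g. 'on_poke' on an object) wired to actions."""
    event: str
    source: str
    actions: List[ActionNode] = field(default_factory=list)

    def connect(self, action: ActionNode) -> "EventNode":
        # Draw a "wire" from this event to an action; return self for chaining.
        self.actions.append(action)
        return self


class NodeGraph:
    def __init__(self) -> None:
        self.events: List[EventNode] = []

    def add(self, node: EventNode) -> EventNode:
        self.events.append(node)
        return node

    def dispatch(self, event: str, source: str, scene: Scene) -> int:
        """Run every action wired to a matching event; return how many ran."""
        ran = 0
        for node in self.events:
            if node.event == event and node.source == source:
                for action in node.actions:
                    action.run(scene)
                    ran += 1
        return ran


# Usage: poking the cube turns it red and reveals a sphere.
scene: Scene = {"cube": {"color": "white"}}
graph = NodeGraph()
graph.add(EventNode("on_poke", "cube")).connect(
    ActionNode("cube", "color", "red")
).connect(ActionNode("sphere", "visible", True))
print(graph.dispatch("on_poke", "cube", scene))  # → 2
```

Keeping each node to a single trigger or a single property change mirrors the "simplified" goal: every node maps to one thing a user can grab and rewire in space.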

Anatomy of an immersive scripting node

Research Prototype (Proof-of-Concept)

Landing Website

Natural Language Editing Prototype

We later prototyped real-time editing capabilities in mixed reality using GPT-4o function calling.
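With function calling, the model does not edit the scene directly; it returns a tool name plus a JSON string of arguments, and the application executes the matching handler. The sketch below assumes the OpenAI Chat Completions `tools` format (`{"type": "function", "function": {...}}`) and leaves out the network request itself. The tool names `set_color` and `set_scale`, the dictionary-based scene, and `apply_tool_call` are hypothetical illustrations, not the prototype's actual functions.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical editable scene: object name -> properties.
scene: Dict[str, Dict[str, Any]] = {"cube": {"color": "white", "scale": 1.0}}

# Tool schemas in the Chat Completions "tools" format.
# Names and parameters are illustrative assumptions.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "set_color",
            "description": "Change the color of an object in the scene.",
            "parameters": {
                "type": "object",
                "properties": {
                    "target": {"type": "string", "description": "Object name"},
                    "color": {"type": "string", "description": "Color name or hex"},
                },
                "required": ["target", "color"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "set_scale",
            "description": "Uniformly scale an object.",
            "parameters": {
                "type": "object",
                "properties": {
                    "target": {"type": "string"},
                    "scale": {"type": "number"},
                },
                "required": ["target", "scale"],
            },
        },
    },
]


def set_color(target: str, color: str) -> str:
    scene[target]["color"] = color
    return f"{target} is now {color}"


def set_scale(target: str, scale: float) -> str:
    scene[target]["scale"] = float(scale)
    return f"{target} scaled to {scale}"


# Map each tool name the model may call to the local function that executes it.
HANDLERS: Dict[str, Callable[..., str]] = {"set_color": set_color, "set_scale": set_scale}


def apply_tool_call(name: str, arguments: str) -> str:
    """Execute one model-issued tool call; `arguments` arrives as a JSON string."""
    handler = HANDLERS.get(name)
    if handler is None:
        return f"unknown tool: {name}"
    kwargs = json.loads(arguments)
    if kwargs.get("target") not in scene:
        return f"unknown object: {kwargs.get('target')}"
    return handler(**kwargs)


# Usage: simulate the tool call a model might return for "make the cube red".
print(apply_tool_call("set_color", '{"target": "cube", "color": "red"}'))  # → cube is now red
```

The returned string can be sent back to the model as the tool result, so it can confirm the edit to the user or ask a follow-up question.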